The Rise of AI Computing Motherboards: What You Need to Know

Artificial intelligence is no longer limited to massive cloud data centers. Today, AI is rapidly expanding into edge computing applications — from autonomous vehicles and robotics to smart retail solutions and industrial automation. Driving this transformation is a new generation of hardware: AI computing motherboards for edge AI.

These specialized AI motherboards are engineered to handle machine learning inference, computer vision, and real-time data analytics directly at the source, without relying on constant cloud connectivity. In this guide, we’ll break down what an AI computing motherboard is, why it’s critical for next-generation AI deployment, and how to choose the right board for your business or industry application.

What Is an AI Computing Motherboard?

An AI computing motherboard is an embedded system board designed specifically for artificial intelligence workloads. Unlike conventional motherboards, which rely mostly on a general-purpose CPU, AI computing motherboards integrate dedicated AI accelerators such as:

- NPU (Neural Processing Unit) – optimized for deep learning at the edge

- TPU (Tensor Processing Unit) – built around tensor (matrix) operations to speed up machine learning inference

- Embedded GPUs from NVIDIA, AMD, or ARM – ideal for parallel computing, AI acceleration, and computer vision

By leveraging these processors, AI motherboards deliver high-performance edge AI capabilities that accelerate tasks like image recognition, predictive analytics, speech and natural language processing (NLP), and real-time decision-making — all without depending on continuous cloud connectivity.

Why AI Workloads Need Specialized Hardware

Running AI models locally — especially in real-time applications — demands more than raw CPU power. It requires:

- High-throughput parallel processing for deep learning

- Low-latency inference for time-critical tasks (e.g., autonomous driving)

- Efficient I/O for handling cameras, sensors, and industrial devices

Standard PC motherboards aren’t optimized for these needs. AI computing motherboards close this performance gap, making them vital for robotics, smart surveillance, digital signage, and healthcare AI solutions.
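The low-latency requirement above can be made concrete with a per-frame time budget. The sketch below is illustrative only: the stage names and timings are hypothetical placeholders rather than measurements from any board, and it assumes each frame is processed sequentially, with no pipelining across stages.

```python
def frame_budget_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def fits_real_time(stage_latencies_ms: dict[str, float], fps: float) -> bool:
    """True if the summed per-frame latency fits within one frame period."""
    return sum(stage_latencies_ms.values()) <= frame_budget_ms(fps)


# Hypothetical per-frame timings in milliseconds (assumed, not measured).
pipeline = {"capture": 4.0, "preprocess": 3.0, "inference": 18.0, "postprocess": 2.0}

print(round(frame_budget_ms(30), 1))  # 33.3
print(fits_real_time(pipeline, 30))   # True: 27.0 ms fits in 33.3 ms
print(fits_real_time(pipeline, 60))   # False: 27.0 ms exceeds 16.7 ms
```

In practice, the inference figure would come from benchmarking the actual model on the candidate board's accelerator.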

According to MarketsandMarkets (2023), AI inference at the edge is projected to grow at a 26% CAGR through 2028.
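To put a 26% CAGR in perspective, the short calculation below compounds it over five years. The 2023 base year and the five-year span are assumptions made here for illustration; the cited figure does not state the exact compounding window.

```python
def cagr_growth_factor(rate: float, years: int) -> float:
    """Total growth multiple after compounding `rate` annually for `years` years."""
    return (1.0 + rate) ** years


# Assumption: 2023 is the base year, so 2028 is five compounding periods later.
factor = cagr_growth_factor(0.26, 5)
print(f"{factor:.2f}x")  # 3.18x
```

Under those assumptions, the market would roughly triple over the period.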

Key Features of Modern AI Motherboards

1. Integrated AI Acceleration (GPU/NPU/TPU)

Modern AI boards ship with integrated AI accelerators that offload inference from the CPU and substantially improve inference throughput and latency.

| Accelerator Type | Example Vendor | Best Use Case | Key Advantage |
| --- | --- | --- | --- |
| NPU | Rockchip, Hailo | Vision processing, IoT | Ultra-low power AI inference |
| GPU | NVIDIA, AMD | AI training, 4K rendering | Highly parallel workloads |
| TPU | Google (Edge TPU) | ML model inference | Tensor-level optimization |

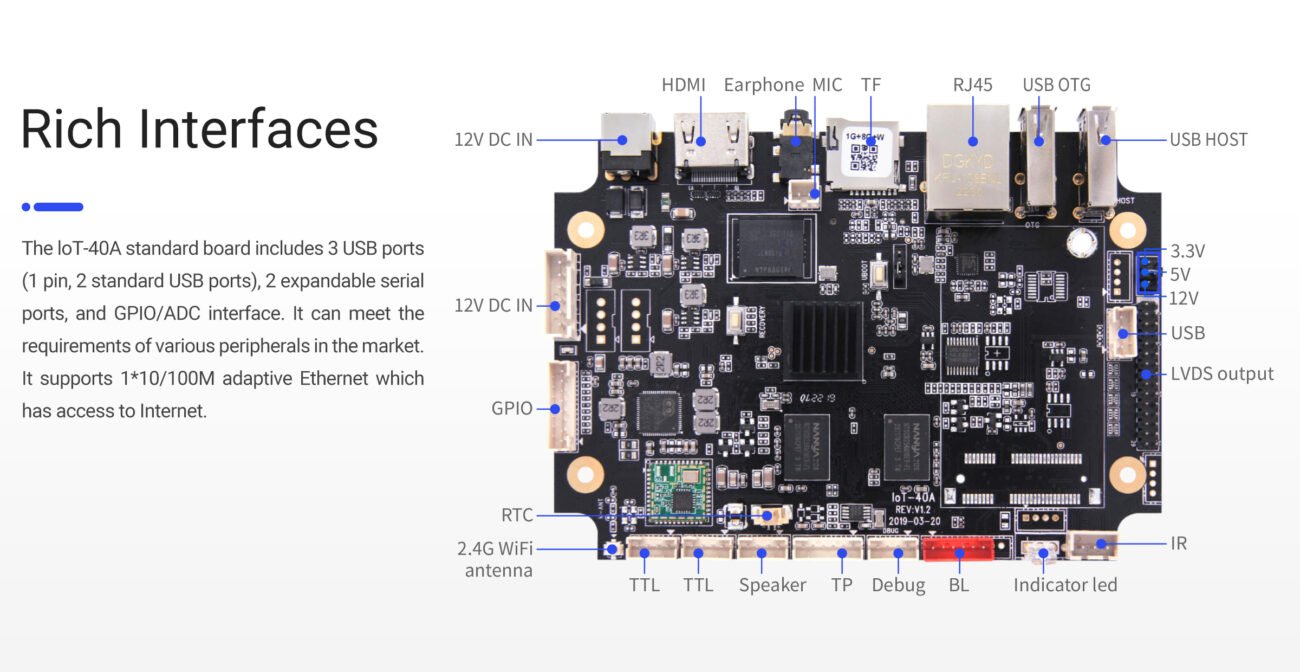

2. I/O and Connectivity for AI Applications

AI deployments often involve multi-device input/output. Leading AI motherboards support:

- USB 3.0 / Type-C for high-speed cameras and sensors

- Gigabit Ethernet / PoE for networking and edge devices

- COM and GPIO for industrial automation

- HDMI / eDP / LVDS outputs for HMI and vision systems

Example use case: Smart Retail Solutions with AI Vision System
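When matching cameras to the interfaces listed above, a rough bandwidth estimate helps. The sketch below compares the raw data rate of an uncompressed video stream against nominal link rates (5 Gbit/s for USB 3.0, 1 Gbit/s for Gigabit Ethernet). It ignores protocol overhead, so real usable throughput is lower, and the 1080p RGB camera is an assumed example.

```python
USB3_GBPS = 5.0              # USB 3.0 nominal signaling rate
GIGABIT_ETHERNET_GBPS = 1.0  # Gigabit Ethernet nominal rate


def raw_video_gbps(width: int, height: int, bytes_per_pixel: int, fps: float) -> float:
    """Uncompressed video data rate in Gbit/s."""
    bits_per_second = width * height * bytes_per_pixel * 8 * fps
    return bits_per_second / 1e9


# Assumed example: one 1080p camera, 24-bit RGB, 30 frames per second.
rate = raw_video_gbps(1920, 1080, 3, 30)
print(round(rate, 2))                 # 1.49
print(rate <= USB3_GBPS)              # True
print(rate <= GIGABIT_ETHERNET_GBPS)  # False
```

Compressed streams such as H.264 need far less bandwidth, which is why IP cameras commonly run over Gigabit Ethernet or PoE.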

3. Power Efficiency and Thermal Design

Edge AI requires energy-efficient, rugged solutions. Industrial AI motherboards are engineered to:

- Run fanlessly in dust-prone environments

- Operate at low power (10–25W typical)

- Support wide temperature ranges (-20°C to +60°C)

At ShiMeta Devices, we offer fanless AI motherboards with extended lifecycles, ideal for embedded OEMs and industrial AI integration.

Real-World Applications of AI Motherboards

- Smart Cities & Transportation: traffic optimization, ANPR, public safety monitoring

- Retail & Advertising: dynamic digital signage, customer analytics, inventory tracking

- Industrial Automation: machine vision QC, predictive maintenance, autonomous robots

- Healthcare & Diagnostics: medical image AI, telehealth kiosks, patient monitoring

How to Choose the Right AI Motherboard

When selecting an AI motherboard for edge deployment, evaluate:

- AI Accelerator: NPU, GPU, or TPU depending on workload

- Memory & Storage: 4GB–16GB RAM, NVMe/eMMC storage options

- Connectivity: PCIe, M.2, GPIO expansion

- Video Outputs: HDMI, eDP, LVDS

- Ecosystem Support: TensorFlow, PyTorch, OpenVINO, CUDA

Pro Tip: Confirm that the board supports the vendor's Linux AI SDK, Android NNAPI (if you deploy on Android), and over-the-air (OTA) updates, so the deployment can be maintained and scaled over the long term.
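The checklist above can be turned into a simple screening step. The following is a minimal sketch, not a vendor tool: the `Board` and `Requirements` types, the field names, and both example boards are hypothetical and exist only to show the idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Board:
    name: str
    accelerator: str               # "NPU", "GPU", or "TPU"
    ram_gb: int
    storage: frozenset[str]        # e.g. {"NVMe", "eMMC"}
    video_outputs: frozenset[str]  # e.g. {"HDMI", "eDP", "LVDS"}
    frameworks: frozenset[str]     # e.g. {"TensorFlow", "PyTorch"}


@dataclass(frozen=True)
class Requirements:
    accelerators: frozenset[str]   # any one of these is acceptable
    min_ram_gb: int
    storage_any: frozenset[str]    # at least one must be offered
    video_outputs: frozenset[str]  # all must be present
    frameworks: frozenset[str]     # all must be supported


def unmet_requirements(board: Board, req: Requirements) -> list[str]:
    """Return human-readable reasons a board fails; an empty list means it passes."""
    problems = []
    if board.accelerator not in req.accelerators:
        problems.append(f"accelerator {board.accelerator} not accepted")
    if board.ram_gb < req.min_ram_gb:
        problems.append(f"RAM {board.ram_gb} GB below {req.min_ram_gb} GB")
    if not (board.storage & req.storage_any):
        problems.append("no acceptable storage option")
    for output in sorted(req.video_outputs - board.video_outputs):
        problems.append(f"missing video output {output}")
    for framework in sorted(req.frameworks - board.frameworks):
        problems.append(f"missing framework {framework}")
    return problems


# Hypothetical boards and requirements, for illustration only.
board_a = Board("board-a", "NPU", 8, frozenset({"eMMC"}),
                frozenset({"HDMI", "LVDS"}), frozenset({"TensorFlow"}))
board_b = Board("board-b", "GPU", 16, frozenset({"NVMe", "eMMC"}),
                frozenset({"HDMI", "eDP"}), frozenset({"TensorFlow", "PyTorch", "CUDA"}))
req = Requirements(frozenset({"GPU", "NPU"}), 8, frozenset({"NVMe"}),
                   frozenset({"HDMI"}), frozenset({"PyTorch"}))

for board in (board_a, board_b):
    print(board.name, unmet_requirements(board, req))
```

Returning the list of failures rather than a plain yes/no makes it easier to see which requirement rules a board out and whether that requirement is negotiable.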

Industrial vs. Consumer-Grade AI Boards

| Feature | Consumer-Grade | Industrial-Grade |
| --- | --- | --- |
| Temperature Range | Limited | Extended (-20°C to +60°C) |
| Lifecycle | 1–2 years | 5–7 years guaranteed |
| Reliability | Moderate | EMC/ESD certified |
| Support | Minimal | Full OEM support + RMA |

For mission-critical projects, industrial AI motherboards deliver higher stability, compliance, and ROI.

Why Choose ShiMeta AI Motherboards?

At ShiMeta Devices, we design AI computing motherboards optimized for OEMs, robotics, healthcare, and industrial automation.

Our solutions feature:

- Embedded NPUs or NVIDIA Jetson modules

- Compact, fanless industrial design

- Custom Android & Linux OS builds

- ISO 9001-certified global manufacturing

Explore ShiMeta AI Computing Motherboards

Request a Custom Quote Today

Conclusion

The future of AI lies at the edge, where real-time processing happens locally on the device rather than in the cloud. AI computing motherboards are central to this shift, providing the foundation for edge machine learning, speech recognition, computer vision, and predictive analytics.

Whether you are building intelligent retail solutions, healthcare AI systems, smart kiosks, or autonomous robots, selecting the right AI motherboard for your edge application is essential. The right hardware underpins the performance, system stability, and long-term return on investment of next-generation AI projects.